Tuesday, October 31, 2023

The Art and Significance of Commemorative Coins

Monday, October 30, 2023

US Quarters Silver Years

Definition of the term "Silver Stacking"

1. Wealth preservation: Many individuals view silver as a store of value and a hedge against economic instability, inflation, and currency devaluation. By accumulating physical silver, they aim to protect their wealth from the eroding effects of inflation.

2. Investment: Some silver stackers consider silver to be a tangible investment. They hope that the value of their silver holdings will appreciate over time, allowing them to profit when they sell it at a higher price.

3. Portfolio diversification: Silver stacking is seen as a way to diversify one's investment portfolio, reducing risk by adding an asset class that behaves differently from stocks, bonds, and other investments.

4. Barter and trade: In extreme economic scenarios, physical silver may be used for barter and trade purposes, as it has been throughout history.

Silver stacking is often associated with the broader community of precious metals enthusiasts and is sometimes linked with "gold stacking," where individuals accumulate physical gold for similar reasons. The appeal of silver stacking varies from person to person, but it generally revolves around the belief that physical silver has intrinsic value and serves as a safeguard against financial uncertainty.

Sunday, October 29, 2023

US Coins Mint Marks

Here are some of the commonly used mint marks on US coins:

1. "P" - Philadelphia Mint: Coins minted at the Philadelphia Mint traditionally did not have a mint mark. However, starting in 1980, the mint began using a "P" mint mark on some coin denominations.

2. "D" - Denver Mint: The "D" mint mark represents coins produced at the Denver Mint, which is one of the active minting facilities in the United States.

3. "S" - San Francisco Mint: The "S" mint mark indicates coins struck at the San Francisco Mint. This mint primarily produces proof coins and commemorative issues.

4. "W" - West Point Mint: The West Point Mint in New York began using the "W" mint mark on some bullion and commemorative coins. It is well-known for producing American Eagle gold and silver bullion coins.

5. "CC" - Carson City Mint: The Carson City Mint, which was operational in the 19th century, used the "CC" mint mark on various coins, such as Morgan Silver Dollars. These are now considered highly collectible due to their historical significance.

6. "O" - New Orleans Mint: The New Orleans Mint, which operated in the 19th century, used the "O" mint mark on coins like the Morgan Silver Dollar and the Seated Liberty Dollar.

7. "C" - Charlotte Mint and "D" - Dahlonega Mint: These mint marks were used in the 19th century to denote coins produced at the Charlotte Mint in North Carolina and the Dahlonega Mint in Georgia.

8. Charlotte Mint gold: The Charlotte Mint struck only gold coins, and they carry the "C" mint mark described above. No separate "CM" mint mark was used on US coinage. Charlotte gold coins are rare today.

9. "M" - Manila Mint: The "M" mint mark was used for coins produced at the United States Mint in Manila, Philippines, which operated during the early 20th century.

These mint marks help numismatists and collectors identify the origin of coins and, in some cases, the specific facility where they were minted. Mint marks can be an essential factor in determining a coin's rarity and value, as coins from certain mints or with particular mint marks may be scarcer than others.

Saturday, October 28, 2023

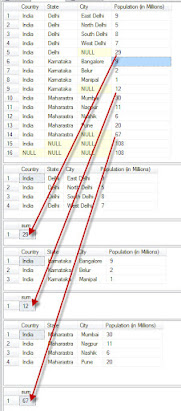

SubTotals with SQL using ROLLUP

To create subtotals in SQL using the ROLLUP operator, you can follow a similar approach to the previous example, but this time, you can include the subtotals explicitly in the result using a CASE statement to identify the subtotal rows. Here's an example:

Assuming you have a table named `Sales` with columns `ProductCategory`, `ProductSubCategory`, and `Revenue`, and you want to calculate the sum of revenue at different hierarchy levels (Category and Subcategory) and include subtotals:

```sql
SELECT
    CASE
        WHEN GROUPING(ProductCategory) = 1 THEN 'Total Category'        -- grand-total row
        WHEN GROUPING(ProductSubCategory) = 1 THEN 'Total Subcategory'  -- per-category subtotal row
        ELSE ProductCategory
    END AS ProductCategory,
    CASE
        WHEN GROUPING(ProductSubCategory) = 1 THEN NULL
        ELSE ProductSubCategory
    END AS ProductSubCategory,
    SUM(Revenue) AS TotalRevenue
FROM
    Sales
GROUP BY
    ROLLUP (ProductCategory, ProductSubCategory)
ORDER BY
    GROUPING(ProductCategory),
    GROUPING(ProductSubCategory),
    ProductCategory,
    ProductSubCategory;
```

In this query:

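- The first `CASE` expression labels the rolled-up rows. With `ROLLUP (ProductCategory, ProductSubCategory)`, `GROUPING(ProductCategory) = 1` is true only for the grand-total row, which the query labels "Total Category". `GROUPING(ProductSubCategory) = 1` together with `GROUPING(ProductCategory) = 0` marks a per-category subtotal row, which the query labels "Total Subcategory". Any other row is a regular detail row. The second `CASE` expression returns `NULL` for the subcategory on rolled-up rows.

- The `ORDER BY` clause sorts on the `GROUPING` values in ascending order, so detail rows (value 0) come first, followed by the per-category subtotal rows, with the grand-total row last.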

In the result, the per-category subtotals and the grand total appear as separate rows, explicitly labeled "Total Subcategory" and "Total Category," respectively.

You can adjust the query and columns as needed to fit your specific data and hierarchy structure. This approach allows you to create subtotals in your SQL query using the ROLLUP operator.
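To make the shape of a ROLLUP result concrete, here is a minimal Python sketch that emulates `GROUP BY ROLLUP (ProductCategory, ProductSubCategory)` with `SUM(Revenue)`. The sample rows and the `rollup` helper are hypothetical and only for illustration. It is not SQL Server output; it returns a separate level label instead of overwriting the category column.

```python
from collections import defaultdict

# Hypothetical sample rows: (ProductCategory, ProductSubCategory, Revenue)
SALES = [
    ("Bikes", "Mountain", 100.0),
    ("Bikes", "Road", 150.0),
    ("Bikes", "Road", 50.0),
    ("Clothing", "Gloves", 20.0),
]


def rollup(rows):
    """Emulate ROLLUP over (category, subcategory), summing revenue."""
    detail = defaultdict(float)       # GROUPING = (0, 0)
    by_category = defaultdict(float)  # GROUPING = (0, 1)
    grand_total = 0.0                 # GROUPING = (1, 1)
    for category, subcategory, revenue in rows:
        detail[(category, subcategory)] += revenue
        by_category[category] += revenue
        grand_total += revenue

    # Same ordering idea as the query: detail rows, then subtotals, then grand total.
    result = [("detail", cat, sub, total)
              for (cat, sub), total in sorted(detail.items())]
    result += [("category subtotal", cat, None, total)
               for cat, total in sorted(by_category.items())]
    result.append(("grand total", None, None, grand_total))
    return result


for row in rollup(SALES):
    print(row)
```

Each input row contributes to exactly three sums (its detail group, its category subtotal, and the grand total), which is what ROLLUP over two columns produces.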

Friday, October 27, 2023

What influences the price of silver?

Jim Fixx

Do you go for a 'run' now and then?

You should know Jim Fixx.

Jim Fixx was an American author and runner who is best known for popularizing the sport of running and jogging in the United States. He was born on April 23, 1932, and died on July 20, 1984.

Jim Fixx's most notable contribution to the fitness and running world was his 1977 book titled "The Complete Book of Running." This book played a significant role in promoting the idea of running as a means of improving physical fitness and overall health. Fixx's work helped to dispel the notion that running was harmful and contributed to the growth of the running and jogging movement during the 1970s and 1980s.

Tragically, Jim Fixx died of a heart attack while out for his daily run in 1984 at the age of 52. His sudden death drew attention to the fact that even people who appeared to be in good physical condition could still be at risk for heart disease. Fixx's passing prompted discussions about the importance of overall health and well-rounded fitness, including cardiovascular health and diet.

Despite his untimely death, Jim Fixx's legacy lives on through his contributions to the popularization of running and the importance of physical fitness. His work has inspired countless individuals to take up running and adopt healthier lifestyles.

Thursday, October 26, 2023

The Essence of Integrity: A Pillar of Moral Character

Wednesday, October 25, 2023

Mainframe Modernization

1. Rehosting (Lift and Shift): In this pattern, the mainframe application is moved to a different platform without making significant changes to the code. It often involves moving to a cloud-based infrastructure or a more modern on-premises system. This approach can be relatively quick but may not take full advantage of modern capabilities.

2. Replatforming (Lift and Reshape): Replatforming involves moving the mainframe application to a new platform, such as a containerized environment or a serverless architecture, with minimal code changes. This approach allows for better scalability, performance, and cost-efficiency while maintaining the core application's functionality.

3. Refactoring (Code Transformation): Refactoring involves restructuring the mainframe code to be more modular and compatible with modern programming languages and architectures. It may involve breaking monolithic applications into microservices or redesigning the user interface for modern web and mobile platforms.

4. Rewriting: In some cases, a complete rewrite of the mainframe application is necessary. This pattern involves recreating the application using modern technologies and frameworks while preserving the business logic and data. It offers the opportunity to redesign the system with current best practices and technologies.

5. Service-Oriented Architecture (SOA) and APIs: This pattern involves exposing mainframe functionality as services or APIs. By doing this, other systems and applications can interact with the mainframe in a more standardized and efficient way. It's a step towards creating a more flexible and interconnected ecosystem.

6. Data Migration and Integration: Modernization efforts often involve migrating data from the mainframe to new databases or data storage solutions. Data integration technologies and techniques play a crucial role in ensuring data consistency and accessibility.

7. DevOps and Continuous Integration/Continuous Deployment (CI/CD): Implementing DevOps practices and CI/CD pipelines can help streamline the modernization process, making it easier to develop, test, and deploy changes to the mainframe applications.

8. Legacy Extension: In some cases, the mainframe system may not be entirely replaced. Instead, it's extended to work alongside new technologies. This allows for a gradual transition and coexistence of legacy and modern systems.

9. Cloud Adoption: Leveraging cloud services can provide scalability, flexibility, and cost savings. Modernization efforts may include migrating to a cloud-based infrastructure and utilizing cloud-native services.

10. Microservices and Containerization: Breaking down monolithic mainframe applications into smaller, manageable microservices and containerizing them can make the system more agile, scalable, and easier to maintain.

11. User Interface Modernization: Improving the user interface is often a key component of mainframe modernization. Legacy green-screen interfaces can be replaced with modern web or mobile interfaces, enhancing the user experience.

12. Legacy Application Integration: Modernizing mainframe systems doesn't necessarily mean replacing them entirely. Integration with newer technologies and systems can allow for a gradual transition and coexistence of legacy and modern components.

Choosing the right modernization pattern depends on the specific needs and constraints of the organization, the nature of the mainframe application, and the desired outcomes. It often involves a combination of these patterns and a well-thought-out modernization strategy.

Mainframe Modernization Patterns (such as Strangler Fig)

The Strangler Fig pattern is a specific modernization approach that can be used when dealing with legacy systems, including mainframes. It is named after the Strangler Fig tree, which starts as a vine and eventually envelops and replaces the host tree. In the context of modernization, the Strangler Fig pattern involves gradually replacing or rebuilding parts of a legacy mainframe system while leaving the core system in place. Here's how it works:

1. Identify Functional Modules: Identify specific functional modules or components within the mainframe application that you want to modernize or replace. These could be parts of the system that are outdated, causing performance issues, or are in need of new features.

2. Develop New Components: Develop new, modern components or services to replace the identified modules. These components are typically built using contemporary technologies and best practices. They are designed to fulfill the same functionality as the old modules but with improved performance, scalability, and flexibility.

3. Integration Layer: Create an integration layer that connects the new components to the existing mainframe system. This layer facilitates communication between the old and new parts of the application. Common approaches include using APIs, microservices, or data integration technologies.

4. Gradual Replacement: Over time, start replacing the identified modules or features in the legacy system with the new components. This can be done incrementally, feature by feature, or module by module. The legacy system gradually gets "strangled" as more and more of its functionality is taken over by the modern components.

5. Testing and Validation: Thoroughly test the integrated system to ensure that it functions correctly and meets business requirements. This includes validating data consistency and ensuring that the new components do not disrupt the overall system's operation.

6. Iterate: Continue this process iteratively, replacing additional modules and expanding the modernization effort. The pace of modernization can be adjusted to the organization's needs, budget, and risk tolerance.
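The routing idea behind steps 3 and 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `legacy_system`, `new_billing_service`, and `StranglerFacade` are hypothetical names, and a real integration layer would sit in front of actual mainframe transactions rather than plain functions.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


def legacy_system(request: dict) -> dict:
    """Hypothetical stand-in for a call into the existing mainframe application."""
    return {"handled_by": "legacy", "feature": request["feature"]}


def new_billing_service(request: dict) -> dict:
    """Hypothetical modern replacement for the billing module."""
    return {"handled_by": "modern", "feature": request["feature"]}


class StranglerFacade:
    """Integration layer: routes each feature to its new component once it has
    been migrated, and falls back to the legacy system otherwise."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, feature: str, handler: Handler) -> None:
        # Gradual replacement: switch one feature at a time.
        self._migrated[feature] = handler

    def handle(self, request: dict) -> dict:
        handler = self._migrated.get(request["feature"], self._legacy)
        return handler(request)


facade = StranglerFacade(legacy_system)
before = facade.handle({"feature": "billing"})
facade.migrate("billing", new_billing_service)
after = facade.handle({"feature": "billing"})
untouched = facade.handle({"feature": "inventory"})
print(before, after, untouched)
```

Because callers only talk to the facade, a feature can be switched over (or switched back by removing it from the routing table) without changing the callers.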

Benefits of the Strangler Fig pattern:

1. Incremental Modernization: This pattern allows organizations to modernize their mainframe systems incrementally without the need for a massive, risky, "big bang" migration.

2. Reduced Risk: Because the legacy system remains operational throughout the modernization process, the risk of unexpected issues causing business disruptions is minimized.

3. Lower Cost: Costs are spread out over time rather than requiring a large upfront investment, making it financially more manageable.

4. Preservation of Business Logic and Data: The core business logic and data in the legacy system remain intact, ensuring continuity of business operations.

5. Flexibility: The organization can adjust the pace of modernization to accommodate changes in business requirements or technological advancements.

The Strangler Fig pattern is a well-regarded approach for modernizing mainframes and other legacy systems. It allows organizations to transition to modern technologies and practices while preserving the value of their existing systems and data.

Tuesday, October 24, 2023

SSIS Packages (overview and example)

Here's an overview of what an SSIS package is and some key concepts:

1. SSIS Package: An SSIS package is a collection of data flow elements, control flow elements, event handlers, parameters, and configurations, which are organized together to perform a specific ETL (Extract, Transform, Load) operation or data integration task. These packages are saved as `.dtsx` files and can be deployed and executed in SQL Server.

2. Data Flow Task: Data flow tasks are the core of most ETL processes in SSIS. They allow you to extract data from various sources, transform it as needed, and load it into one or more destinations. Data flow tasks consist of data sources, data transformations, and data destinations.

3. Control Flow: The control flow defines the workflow and execution order of tasks within an SSIS package. It includes tasks like Execute SQL Task, File System Task, Script Task, and precedence constraints to manage the flow of control.

4. Variables and Parameters: SSIS packages can use variables and parameters to store values, configurations, and input parameters. Variables can be scoped at different levels within a package.

5. Event Handlers: Event handlers allow you to define actions to be taken when specific events occur during package execution, such as success, failure, or warning events.

6. Configuration: SSIS packages can be configured to use different settings in different environments. You can use XML files, environment variables, or SQL Server configurations to manage package configurations.

7. Logging: Logging allows you to track the execution of the SSIS package, capturing information about what happens during runtime, which can be helpful for troubleshooting and auditing.

8. Deployment: After designing and testing your SSIS packages, you can deploy them to SQL Server, where they can be scheduled for execution and managed using SQL Server Agent or other scheduling tools.

SSIS is commonly used for tasks like data migration, data warehousing, data cleansing, and more. It provides a visual design interface in SQL Server Data Tools (formerly BIDS - Business Intelligence Development Studio) for building packages, and it supports a wide range of data sources and destinations.

Keep in mind that the specifics of SSIS may change over time with new versions and updates. Therefore, it's important to refer to the documentation and resources for the version of SQL Server you are working with for the most up-to-date information.

SSIS Package Example:

Here is a simple example of an SSIS package that demonstrates a common ETL (Extract, Transform, Load) scenario. In this example, we'll create an SSIS package that extracts data from a flat file, performs a basic transformation, and loads the data into a SQL Server database table.

Step 1: Create a New SSIS Package

1. Open SQL Server Data Tools (SSDT).

2. Create a new Integration Services Project.

3. In the Solution Explorer, right-click on the "SSIS Packages" folder, and choose "New SSIS Package" to create a new SSIS package.

Step 2: Configure Data Source

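1. Drag and drop a "Data Flow Task" from the SSIS Toolbox onto the Control Flow design surface, and double-click it to open the Data Flow tab. Then drag and drop a "Flat File Source" from the SSIS Toolbox onto the Data Flow design surface.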

2. Double-click the "Flat File Source" to configure it.

- Choose a flat file connection manager (or create a new one).

- Select the flat file and configure the column delimiter, text qualifier, etc.

Step 3: Configure Data Transformation

1. Drag and drop a "Derived Column" transformation from the SSIS Toolbox onto the Data Flow design surface.

2. Connect the output of the "Flat File Source" to the "Derived Column" transformation.

3. Double-click the "Derived Column" transformation to add a new derived column. For example, you can concatenate two columns or convert data types.

Step 4: Configure Data Destination

1. Drag and drop an "OLE DB Destination" from the SSIS Toolbox onto the Data Flow design surface.

2. Connect the output of the "Derived Column" transformation to the "OLE DB Destination."

3. Double-click the "OLE DB Destination" to configure it.

- Choose an existing OLE DB connection manager (or create a new one).

- Select the target SQL Server table where you want to load the data.

Step 5: Run the SSIS Package

1. Save the SSIS package.

2. Right-click on the package in the Solution Explorer and choose "Execute Package" to run the package.

Step 6: Monitor and Review Execution

You can monitor the execution of the SSIS package in real-time. Any errors or warnings will be reported in the SSIS execution logs.

This is a basic example of an SSIS package. In a real-world scenario, you might have more complex transformations, multiple data sources and destinations, error handling, and more sophisticated control flow logic.

The SSIS package can be saved, deployed, and scheduled for execution using SQL Server Agent or other scheduling mechanisms, depending on your organization's requirements.

Remember that SSIS is a versatile tool, and the specifics of your package will depend on your data integration and transformation needs.
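For readers who think in code, the same extract, transform, and load flow can be sketched in plain Python. This is an analogy, not SSIS itself: the flat-file content, the `people` table, and the `full_name` derived column are all hypothetical, and an in-memory SQLite database stands in for the SQL Server destination.

```python
import csv
import io
import sqlite3

# Hypothetical flat-file content (plays the role of the Flat File Source).
FLAT_FILE = "first_name,last_name\nAda,Lovelace\nAlan,Turing\n"


def extract(text):
    """Read delimited text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Derived column: concatenate two input columns into full_name."""
    return [
        {**row, "full_name": f"{row['first_name']} {row['last_name']}"}
        for row in rows
    ]


def load(rows, conn):
    """Insert the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS people "
        "(first_name TEXT, last_name TEXT, full_name TEXT)"
    )
    conn.executemany(
        "INSERT INTO people VALUES (:first_name, :last_name, :full_name)", rows
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the OLE DB Destination
load(transform(extract(FLAT_FILE)), conn)
loaded = conn.execute("SELECT full_name FROM people ORDER BY full_name").fetchall()
print(loaded)
```

The three functions map onto the source, Derived Column transformation, and destination configured in Steps 2 through 4.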

Monday, October 23, 2023

SQL Server Calculate Distance (Latitude & Longitude)

```sql
DECLARE @lat1 FLOAT = 52.5200; -- Latitude of the first point
DECLARE @lon1 FLOAT = 13.4050; -- Longitude of the first point
DECLARE @lat2 FLOAT = 48.8566; -- Latitude of the second point
DECLARE @lon2 FLOAT = 2.3522; -- Longitude of the second point
DECLARE @R FLOAT = 6371; -- Earth's radius in kilometers

-- Convert degrees to radians
SET @lat1 = RADIANS(@lat1);
SET @lon1 = RADIANS(@lon1);
SET @lat2 = RADIANS(@lat2);
SET @lon2 = RADIANS(@lon2);

-- Haversine formula
DECLARE @dlat FLOAT = @lat2 - @lat1;
DECLARE @dlon FLOAT = @lon2 - @lon1;
DECLARE @a FLOAT = SIN(@dlat / 2) * SIN(@dlat / 2) + COS(@lat1) * COS(@lat2) * SIN(@dlon / 2) * SIN(@dlon / 2);
DECLARE @c FLOAT = 2 * ATN2(SQRT(@a), SQRT(1 - @a));
DECLARE @distance FLOAT = @R * @c;

-- Result in kilometers
SELECT @distance AS DistanceInKilometers;
```

In this example, the two points are given as latitude and longitude pairs in decimal degrees: (`@lat1`, `@lon1`) and (`@lat2`, `@lon2`). The Haversine formula calculates the great-circle distance between them on a sphere of radius `@R`. The result is in kilometers because `@R` is in kilometers.

You can modify the values of `@lat1`, `@lon1`, `@lat2`, and `@lon2` to calculate the distance between a different pair of coordinates.
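As a cross-check outside the database, the same formula can be written in Python. This is a minimal sketch that mirrors the T-SQL above; the function name `haversine_km` is made up for this example, and it assumes the same spherical Earth radius of 6371 km.

```python
import math

EARTH_RADIUS_KM = 6371.0  # same radius as @R in the T-SQL example


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    c = 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a))
    return EARTH_RADIUS_KM * c


# Same coordinates as the T-SQL variables above.
distance = haversine_km(52.5200, 13.4050, 48.8566, 2.3522)
print(round(distance, 1))
```

Running both versions with the same inputs is a quick way to confirm that the degree-to-radian conversion has been applied exactly once.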

Sunday, October 22, 2023

Radians

Radians are a unit of measurement used to quantify angles in geometry and trigonometry. One radian is defined as the angle subtended at the center of a circle by an arc that is equal in length to the radius of the circle. In other words, if you lay a string that is as long as the radius along the edge of the circle, the angle at the center between the two ends of the string is one radian.

Radians are commonly used in mathematical and scientific calculations involving angles because they have certain advantages over degrees. In radians, the relationship between the length of an arc, the radius, and the angle is straightforward: the angle in radians is equal to the arc length divided by the radius. This makes radians a more natural choice for calculus and trigonometry, as it simplifies many mathematical formulas and calculations involving angles. There are 2π radians in a full circle, which is equivalent to 360 degrees.
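The two relationships above (angle equals arc length divided by radius, and 2π radians equal 360 degrees) can be checked with a short Python sketch. The helper names are made up for illustration; Python's standard `math.radians` and `math.degrees` do the same conversions.

```python
import math


def degrees_to_radians(deg):
    """Convert degrees to radians using 360 degrees = 2 * pi radians."""
    return deg * math.pi / 180.0


def angle_from_arc(arc_length, radius):
    """Angle in radians subtended by an arc of the given length."""
    return arc_length / radius


full_circle = degrees_to_radians(360)
one_radian_in_degrees = math.degrees(1)  # roughly 57.3 degrees

print(full_circle, angle_from_arc(5.0, 5.0), one_radian_in_degrees)
```

An arc as long as the radius gives exactly one radian, which matches the definition.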

SQL Server Convert Rows to Columns (Pivot)

Friday, October 20, 2023

Creating a Salesforce Trigger to Update Records

Here's a step-by-step guide:

Make sure you have the necessary permissions and access to Salesforce setup.

- From Salesforce Setup, type "Apex Triggers" in the Quick Find box.

- Click on "Apex Triggers."

- Click the "New Trigger" button.

- Choose the object you want to create the trigger for (e.g., `Opportunity`).

- Name your trigger (e.g., `UpdatePersonnelRecordTrigger`).

In the trigger, you'll write Apex code that specifies when the trigger should fire and what it should do.

```apex
trigger UpdatePersonnelRecordTrigger on Opportunity (after update) {
    // Create a set to store Personnel Record IDs to update
    Set<Id> personnelIdsToUpdate = new Set<Id>();

    // Iterate through the updated Opportunities
    for (Opportunity opp : Trigger.new) {
        // Skip Opportunities that have no related Personnel Record
        if (opp.StageName == 'Closed Won' && opp.Personnel__c != null) {
            // Add the related Personnel Record ID to the set
            personnelIdsToUpdate.add(opp.Personnel__c);
        }
    }

    // Nothing to do if no Opportunity qualified
    if (personnelIdsToUpdate.isEmpty()) {
        return;
    }

    // Query the Personnel Records to be updated
    List<Personnel__c> personnelToUpdate = [
        SELECT Id, FieldToUpdate__c
        FROM Personnel__c
        WHERE Id IN :personnelIdsToUpdate
    ];

    // Update the Personnel Records
    for (Personnel__c personnel : personnelToUpdate) {
        personnel.FieldToUpdate__c = 'New Value'; // Update the field as needed
    }

    // Save the updated Personnel Records in a single DML statement
    update personnelToUpdate;
}
```

- Before deploying, it's important to write unit tests to ensure the trigger works as expected.

- Once your trigger is ready, deploy it to your Salesforce environment.

- After deploying, make sure the trigger is active for the desired object (e.g., `Opportunity`).

Wednesday, October 18, 2023

SQL Server ROLLUP

Saturday, October 14, 2023

SQL Server CASE Statement

Friday, October 13, 2023

Calculate Day Of Week in SQL Server

Day Of Week As String SQL Server

Thursday, October 12, 2023

About Data Scraping

The process of data scraping typically involves the following steps:

1. Sending HTTP Requests: Scraping tools or scripts send HTTP requests to specific URLs, just like a web browser does when you visit a website.

2. Downloading Web Pages: The HTML content of the web pages is downloaded in response to the HTTP requests.

3. Parsing HTML: The downloaded HTML is then parsed to extract the specific data of interest, such as text, images, links, or tables.

4. Data Extraction: The desired data is extracted from the parsed HTML. This can involve locating specific elements in the HTML code using techniques like XPath or CSS selectors.

5. Data Transformation: The extracted data is often cleaned and transformed into a structured format, such as a CSV file, database, or JSON, for further analysis.
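The parsing, extraction, and transformation steps can be sketched with Python's standard library alone. The HTML below is a hypothetical, hard-coded page so that the example runs without network access. A real scraper would first download the page (steps 1 and 2), for example with `urllib.request.urlopen`. The class names `name` and `price` are assumptions about the page's markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content standing in for a downloaded web page.
SAMPLE_HTML = """
<table>
  <tr><td class="name">Widget</td><td class="price">$4.50</td></tr>
  <tr><td class="name">Gadget</td><td class="price">$12.00</td></tr>
</table>
"""


class CellParser(HTMLParser):
    """Collects the text of <td class="name"> and <td class="price"> cells."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = {}
        self._field = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = {}
        elif tag == "td":
            self._field = dict(attrs).get("class")

    def handle_data(self, data):
        if self._field in ("name", "price") and data.strip():
            self._row[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "td":
            self._field = None
        elif tag == "tr" and self._row:
            self.rows.append(self._row)


def scrape(html):
    """Parse the HTML, extract the cells, and convert prices to numbers."""
    parser = CellParser()
    parser.feed(html)
    return [
        {"name": row["name"], "price": float(row["price"].lstrip("$"))}
        for row in parser.rows
    ]


def to_csv(records):
    """Write the structured records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = scrape(SAMPLE_HTML)
print(to_csv(records))
```

Dedicated libraries offer XPath or CSS selectors for locating elements; the event-based parser here is just the smallest dependency-free way to show the flow.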

Data scraping can be used for a wide range of purposes, including:

- Competitive analysis: Gathering data on competitors' prices, products, or strategies.

- Market research: Collecting data on market trends, customer reviews, or product information.

- Lead generation: Extracting contact information from websites for potential sales or marketing leads.

- News and content aggregation: Gathering news articles, blog posts, or other content from various sources.

- Price monitoring: Keeping track of price changes for e-commerce products.

- Data analysis and research: Collecting data for research and analysis purposes.

It's important to note that while data scraping can be a valuable tool for data collection and analysis, it should be done responsibly and in compliance with legal and ethical considerations. Many websites have terms of service that prohibit scraping, and there may be legal restrictions on the types of data that can be collected. Always respect website terms and conditions, robots.txt files, and applicable data protection laws when performing data scraping.
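One practical way to respect robots.txt is to check each URL against it before requesting the page. The sketch below uses Python's standard `urllib.robotparser`. The rules, the `ExampleBot` user agent, and the example.com URLs are hypothetical, and the rules are parsed from a hard-coded list so that the example runs offline.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load the site's own file,
# for example with parser.set_url(...) followed by parser.read().
ROBOTS_LINES = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(ROBOTS_LINES)

allowed_public = parser.can_fetch("ExampleBot", "https://example.com/products")
allowed_private = parser.can_fetch("ExampleBot", "https://example.com/private/data")

print(allowed_public, allowed_private)
```

A robots.txt check covers only one of the considerations above; terms of service and data protection laws still need to be reviewed separately.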

Data Security Guidelines

1. Understand Data Classification:

- Identify and classify data based on its sensitivity and importance. This can include categories like public, internal, confidential, and restricted.

2. Access Control:

- Implement strict access controls to ensure that only authorized individuals can access sensitive data. Use strong authentication methods, like two-factor authentication (2FA).

3. Data Encryption:

- Encrypt data both in transit and at rest. This includes using protocols like HTTPS and encrypting stored data with strong encryption algorithms.

4. Regular Updates and Patch Management:

- Keep all software, including operating systems and applications, up to date with security patches and updates to protect against known vulnerabilities.

5. Security Policies:

- Develop and enforce data security policies and procedures. These should include guidelines for data handling, retention, and disposal.

6. Employee Training:

- Train employees on data security best practices. Ensure they understand the risks and their role in protecting sensitive data.

7. Backup and Recovery:

- Regularly back up data and test data recovery processes. This can help in case of data loss due to breaches or other incidents.

8. Firewalls and Intrusion Detection Systems:

- Implement firewalls and intrusion detection systems to monitor and protect your network from unauthorized access.

9. Vendor Security Assessments:

- If you use third-party vendors or cloud services, assess their data security practices to ensure your data remains secure when in their custody.

10. Incident Response Plan:

- Develop an incident response plan to address data breaches or security incidents. This should include steps to contain, investigate, and mitigate the impact of an incident.

11. Data Minimization:

- Collect only the data necessary for your business processes. Avoid storing excessive or unnecessary data.

12. Data Destruction:

- Properly dispose of data that is no longer needed. Shred physical documents and securely erase digital data to prevent data leakage.

13. Regular Auditing and Monitoring:

- Continuously monitor your systems for suspicious activities and conduct regular security audits to identify vulnerabilities.

14. Secure Mobile Devices:

- Enforce security measures on mobile devices, including strong passwords, remote wipe capabilities, and encryption.

15. Physical Security:

- Ensure that physical access to servers and data storage locations is restricted and monitored.

16. Secure Communication:

- Use secure communication channels, such as Virtual Private Networks (VPNs), to protect data during transmission.

17. Privacy Compliance:

- Be aware of and comply with relevant data protection and privacy regulations, such as GDPR, HIPAA, or CCPA, depending on your location and industry.

18. Regular Security Awareness Training:

- Conduct ongoing security awareness training for employees to keep them updated on the latest threats and security best practices.

19. Logging and Monitoring:

- Maintain detailed logs of system activities and regularly review them for signs of suspicious or unauthorized access.

20. Data Security Culture:

- Promote a culture of data security within your organization, making it a shared responsibility from top management down to every employee.

Remember that data security is an ongoing process, and it requires a combination of technology, policies, and education. Tailor these guidelines to the specific needs of your organization and regularly update them as new threats emerge or regulations change.

Tuesday, October 10, 2023

How to use SOUNDEX in SQL Server

1. Syntax:

```sql
SOUNDEX (input_string)
```

- `input_string`: The string you want to convert into a SOUNDEX code.

2. Example:

Let's say you have a table called `names` with a column `name` and you want to find all the names that sound similar to "John." You can use the SOUNDEX function like this:

```sql
SELECT name
FROM names
WHERE SOUNDEX(name) = SOUNDEX('John');
```

This query will return all the names in the `names` table that have the same SOUNDEX code as "John."

3. Limitations:

- SOUNDEX is primarily designed for English language pronunciation and may not work well for names from other languages.

- It only produces a four-character code, so it may not be precise enough for all use cases.

- SOUNDEX is case-insensitive.

4. Alternative Functions:

- `DIFFERENCE`: The `DIFFERENCE` function compares the SOUNDEX codes of two strings and returns an integer from 0 to 4, where 4 means the codes match most closely. It takes the strings themselves as arguments, not their SOUNDEX codes, and can help you find names that are similar but not identical in pronunciation.

```sql
SELECT name
FROM names
WHERE DIFFERENCE(name, 'John') >= 3;
```

In the example above, a `DIFFERENCE` value of 3 or 4 indicates a reasonable similarity in pronunciation.

5. Indexing: If you plan to use SOUNDEX for searching in large tables, consider storing the SOUNDEX code in its own column (for example, a persisted computed column) and indexing that column. Wrapping `name` in `SOUNDEX()` in the `WHERE` clause generally prevents an index on `name` from being used for a seek.

6. Considerations: Keep in mind that SOUNDEX is a relatively simple algorithm, and it may not always provide accurate results, especially for names with uncommon pronunciations or non-English names. There are more advanced phonetic algorithms and libraries available for more accurate phonetic matching, such as Metaphone, Double Metaphone, and others.

Remember that while SOUNDEX can be a useful tool for certain scenarios, it may not be suitable for all cases, and you should evaluate your specific requirements before using it in your SQL queries.
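To see why names such as "John" and "Jon" match, it helps to look at how a four-character code is built. The Python sketch below follows the commonly described American Soundex rules. It is an illustration only, and SQL Server's built-in `SOUNDEX` may differ in edge cases (for example, depending on the database compatibility level).

```python
# Letter-to-digit mapping used by American Soundex.
CODES = {}
for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in letters:
        CODES[letter] = digit


def soundex(name):
    """Return a four-character Soundex code, or '' if the name has no ASCII letters."""
    letters = [ch for ch in name.upper() if ch.isascii() and ch.isalpha()]
    if not letters:
        return ""
    result = [letters[0]]                # the first letter is kept as-is
    previous = CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "HW":
            continue                     # H and W do not separate equal codes
        code = CODES.get(ch, "")         # vowels and Y have no code
        if code and code != previous:
            result.append(code)
        previous = code                  # a vowel resets this, so repeats after it count
    return ("".join(result) + "000")[:4]  # pad with zeros, keep four characters


for example in ("John", "Jon", "Robert", "Rupert"):
    print(example, soundex(example))
```

Because the vowels drop out and similar consonants share a digit, differently spelled names collapse to the same code, which is also why unrelated names can collide.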

The Art of Data Analysis

The Significance of Data Analysis:

Data analysis is at the heart of decision-making in today's world, guiding business strategies, influencing healthcare decisions, and informing government policies. Its significance lies in its capacity to unlock the latent potential within datasets, transforming raw information into actionable knowledge. The insights gleaned from data analysis can optimize processes, reduce costs, improve customer experiences, and grant organizations a competitive advantage.

The Art of Data Collection:

The journey of data analysis commences with data collection, a process that demands precision and forethought. Data can originate from diverse sources, such as surveys, sensors, social media, and transaction records. The art of data collection entails selecting the right data to gather, ensuring its accuracy, and safeguarding its integrity. Inaccurate or incomplete data can lead to erroneous analyses, underscoring the pivotal role data collection plays in the art of data analysis.

Data Cleaning and Preprocessing:

Raw data is rarely pristine; it often harbors errors, outliers, and missing values. The art of data analysis includes data cleaning and preprocessing, vital steps that refine data quality and reliability. Analysts must employ creativity and problem-solving skills as they grapple with issues like missing data and outliers, deciding on appropriate data transformation techniques, and selecting statistical tools for analysis.

Exploratory Data Analysis (EDA):

Exploratory data analysis serves as the canvas upon which the art of data analysis is painted. This stage involves generating descriptive statistics, visualizations, and graphs to gain an initial grasp of the data's patterns and characteristics. EDA encourages analysts to think critically and creatively, enabling them to uncover hidden relationships, identify anomalies, and formulate hypotheses.
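
A first pass at EDA might look like the following Python sketch, which computes descriptive statistics for two variables and the Pearson correlation between them. The helper names describe and pearson, and the advertising and sales figures, are hypothetical and exist only to show the idea.

```python
from math import sqrt
from statistics import mean, median, stdev


def describe(values):
    """Summarize a numeric column with a few descriptive statistics."""
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
    }


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: advertising spend and the sales observed alongside it.
ad_spend = [1, 2, 3, 4, 5]
sales = [2.1, 3.9, 6.2, 7.8, 10.1]

print(describe(ad_spend))
print(describe(sales))
print(round(pearson(ad_spend, sales), 3))
```

A strong correlation such as this one is a hypothesis to investigate further, not evidence that one variable causes the other.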

The Power of Visualization:

Data visualization is the artistry within data analysis, where raw numbers are transformed into captivating narratives. Using visualizations such as scatterplots, bar charts, and heatmaps, analysts convey their findings effectively. Selecting appropriate visualization techniques and crafting aesthetically pleasing representations require both technical expertise and an artistic eye. Effective visualizations engage the audience, rendering complex data accessible and comprehensible.
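
Even without a plotting library, the idea of turning numbers into a picture can be sketched in a few lines of Python. The example below draws a text bar chart; the function name bar_chart and the regional order counts are assumptions, and in practice a dedicated charting tool would normally be used instead.

```python
def bar_chart(counts, width=20):
    """Render a mapping of label -> value as a simple text bar chart."""
    peak = max(counts.values())
    label_width = max(len(label) for label in counts)
    lines = []
    for label, value in counts.items():
        # Scale each bar relative to the largest value in the chart.
        bar = "#" * round(value / peak * width)
        lines.append(f"{label.ljust(label_width)} | {bar} {value}")
    return "\n".join(lines)


# Hypothetical order counts per sales region.
orders = {"North": 40, "South": 20, "East": 10}
chart = bar_chart(orders)
print(chart)
```

Scaling every bar against the largest value keeps the comparison honest: a region with half the orders gets a bar half as long.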

Statistical Analysis and Machine Learning:

The art of data analysis seamlessly integrates classical statistical techniques and modern machine learning methods. Statistical analysis furnishes a robust framework for hypothesis testing, parameter estimation, and the extraction of meaningful conclusions from data. On the other hand, machine learning empowers analysts to construct predictive models, classify data, and discern intricate patterns often imperceptible to the human eye.
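
One of the simplest predictive models is a straight line fitted by ordinary least squares, which sits at the meeting point of classical statistics and machine learning. The Python sketch below is a minimal version of that technique; the function names fit_line and predict, and the study-hours data, are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Use the fitted line to estimate y for a new x."""
    return slope * x + intercept


# Hypothetical data: hours studied and the exam score that followed.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)
print(round(slope, 2), round(intercept, 2))
print(round(predict(6, slope, intercept), 1))
```

The fitted slope estimates how many points the score changes per extra hour of study in this sample. Predictions far outside the observed range of hours should be treated with caution.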

Interpretation and Communication:

Translating data insights into actionable recommendations is a pivotal facet of data analysis. Analysts must be able to communicate their findings effectively to stakeholders, whatever those stakeholders' level of technical expertise. This requires not only explaining results but also providing context and guidance on using the insights to make informed decisions.

Ethical Considerations:

The art of data analysis is not devoid of ethical considerations. Analysts grapple with issues related to privacy, bias, and the responsible handling of data. A commitment to fairness, transparency, and the ethical treatment of sensitive information is imperative.

Challenges in Data Analysis:

While data analysis offers immense potential, it is not without challenges. Some of the key challenges include:

Data Quality: Ensuring data accuracy and integrity is an ongoing battle. Analysts often spend a significant portion of their time cleaning and preprocessing data to remove errors and inconsistencies.

Data Volume: The explosion of data in recent years, often referred to as "big data," presents challenges in terms of storage, processing, and analysis. Analysts must employ specialized tools and techniques to handle large datasets effectively.

Data Variety: Data comes in various formats and structures, including structured, semi-structured, and unstructured data. Dealing with diverse data sources requires adaptability and expertise in different data handling methods.

Data Privacy: As data analysis involves the handling of personal and sensitive information, privacy concerns have grown. Analysts must navigate legal and ethical considerations to protect individuals' data.

Bias and Fairness: Biases in data, algorithms, or analysis techniques can lead to unfair or discriminatory outcomes. Ensuring fairness and mitigating bias in data analysis is a critical ethical concern.

Interpretation Challenges: Data analysis often involves making sense of complex patterns and correlations. Misinterpretation can lead to erroneous conclusions, emphasizing the importance of expertise and domain knowledge.

Data Security: Protecting data from breaches and unauthorized access is vital. Security measures are essential to safeguard sensitive information during the analysis process.
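
Of the challenges listed above, bias and fairness can be made partly measurable. The Python sketch below compares the rate of positive outcomes across groups, one simple check among many. The function names, the group labels, and the decision records are assumptions invented for the example.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions: (group label, whether the application was approved).
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = positive_rates(decisions)
print(rates)
print(parity_gap(rates))
```

A large gap is a prompt for closer investigation rather than proof of discrimination, since the groups may differ in ways the raw rates do not capture.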

The Expanding Role of Data Analysis:

The art of data analysis is not limited to any single industry or domain; its scope continues to expand. Here are a few areas where data analysis has made a profound impact:

Business and Marketing: Data analysis drives marketing strategies, customer segmentation, and product development. It enables companies to optimize pricing, identify market trends, and enhance customer experiences.

Healthcare: Data analysis plays a pivotal role in patient care, disease prediction, drug discovery, and healthcare system optimization. It helps identify health trends, supports personalized medicine, and enables early disease detection.

Finance: In the financial sector, data analysis aids in risk assessment, fraud detection, algorithmic trading, and portfolio management. It provides insights for investment decisions and regulatory compliance.

Environmental Science: Data analysis helps monitor environmental changes, climate patterns, and the impact of human activities on ecosystems. It informs policies for sustainability and conservation.

Social Sciences: Researchers use data analysis to study human behavior, demographics, and societal trends. It informs public policy, social programs, and academic research.

Sports Analytics: Data analysis has transformed sports by providing insights into player performance, strategy optimization, and fan engagement. It has become a game-changer in professional sports.

Conclusion:

The art of data analysis is a harmonious fusion of science, creativity, and critical thinking. It empowers us to harness the power of data to solve complex problems, make informed decisions, and drive innovation. In our data-rich world, mastering the art of data analysis is not just a skill but also a responsibility. It enables us to unlock the potential of data for the betterment of society.

Data analysis is an art that continually evolves, shaping our understanding of the world and propelling progress in nearly every facet of human endeavor. As we navigate the vast landscape of data, we must uphold ethical standards, champion fairness, and utilize data analysis as a force for good. In doing so, we will continue to unveil the insights that drive innovation, inform policy, and transform our modern world. The art of data analysis is, indeed, a masterpiece in the making, waiting to be painted with each new dataset and each fresh perspective.